Postgres on Kubernetes for the Reluctant DBA

Slides and transcript from my talk, "Postgres on Kubernetes for the Reluctant DBA", at Data on Kubernetes Day Europe in London on 1 April 2025.

Introduction

This is me!

As you can see from the diagram representing my career so far (and as you already know if you've read my posts or watched my talks before),

I have a database background.

I was a DBA for 20 years before I moved into database consultancy, and I’m now a senior solutions architect at Crunchy Data,

working with customers to design, implement and manage their database environments, almost exclusively on Kubernetes.

Over the past few years, I’ve given a lot of talks about running Postgres on Kubernetes,

and I work with a lot of customers who are at various points on their databases-on-Kubernetes journey.

The questions from the audience and the hallway conversations at conferences are always interesting, and tend to come from one of two groups of people:

- People who are deep into running databases on Kubernetes and are looking for answers to some tricky technical issue or architectural question.

- Self-proclaimed “old-school DBAs” who still aren’t convinced that running databases in containers is a good idea.

I prepared this talk especially for that 2nd group of people, so I hope some of you are in the audience today!

And don’t forget, as you saw on the previous slide, I come from an old-school DBA background, so I’ve gone through that process.

To get an idea of who was in the audience, I asked the question:

What’s your main area of responsibility in your current role?

There was a reasonably even split between:

- Databases

- System or Platform Administration

- DevOps or Automation

- Development

The plan for the session was:

- Some Databases on Kubernetes background.

- Some audience participation.*

- A look at some of the main concerns that DBAs have about running DBs on Kubernetes.

- Some of the challenges you might encounter, and how you can overcome them.

- The strengths of databases on Kubernetes - the positives for you, the DBA.

- And finally, a look at how you can get started and build confidence.

* In fact, there was so much audience participation, and I was enjoying it so much, that the rest of the agenda went slightly off the rails. I've included all of the missed slides and the things I planned to say in this post to make up for it!

Background

This is a slide that I used in previous talks to discuss the evolution of database architecture,

and you can find a similar diagram in the Kubernetes documentation.

This is obviously completely oversimplified, and not entirely accurate!

Firstly, it gives the impression that there has been a gradual, linear migration from bare metal via VMs to containerised environments.

But there are still databases being deployed on bare metal and on VMs, often for good reasons.

Also, rather than just showing containers, it would be much more accurate to show Kubernetes,

because I don’t know of anyone brave enough to deploy enterprise-scale containerised databases without container orchestration.

But the main thing that stands out as inaccurate for me is the suggestion that this shift to containerised databases has been smooth and simple.

Kubernetes is already 10 years old and there’s been a massive shift towards it in that time. It may originally have been created with stateless apps in mind, but data on Kubernetes wasn’t far behind:

- With the introduction of support for the Kubernetes Operator, and features for Stateful Sets, in 2016, a production database workload on Kubernetes was beginning to be realistic.

- The Crunchy Data Postgres Operator was released in 2017 and was followed by other database operators. The landscape is still growing and evolving.

- The DoKC was launched in 2020 to “advance the use of Kubernetes for stateful and data workloads, and unite the ecosystem around data on K8s”.

- By 2022 the DoKC said that 70% of companies were running stateful workloads on Kubernetes in production.

And the 2024 DoKC report says that databases remain the #1 workload on Kubernetes, highlighting the platform’s "reliability for mission-critical workloads".

Audience participation

I wanted to know more about the audience's experience with running databases on Kubernetes: their worries if they weren't already running databases on Kubernetes, and the challenges they are currently facing, or have previously faced, if they are. The answers were really interesting, so I've shared them here.

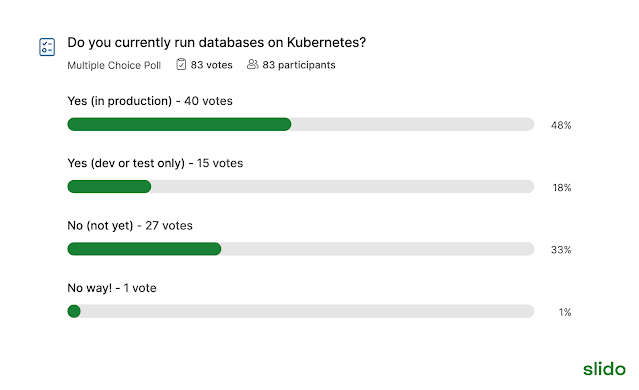

Almost half of the audience are already running databases on Kubernetes in production, and only 1% (1 person) said "No way!".

Surprisingly, to me at least, performance came out as the most common thing holding people back from databases on Kubernetes. Major upgrades, lack of experience, stability and data loss also ranked highly as concerns.

Backups were expressed as the biggest challenge for those already running databases on Kubernetes.

DBA Concerns, Worries & Fears

This section on DBA concerns is based on the conversations I’ve had with lots of Kubernetes-shy DBAs. It's interesting to see the differences between this list and the audience's responses. The next version of this talk will have to be updated to take those into account.

Many DBAs are, understandably, not all that keen on the stateless and ephemeral nature of containers.

We all know that containers have a lot of great features - isolated, lightweight, portable etc.

and that they allow scalable and flexible architectures.

But trusting your data to something designed to be stateless and temporary?

That goes against everything you’ve ever been taught as a DBA!

You may also be wondering:

- If we’re all running databases on Kubernetes, will anyone even need DBAs anymore? (spoiler: yes)

- Kubernetes is just for stateless apps, isn’t it?

- How can I be sure my data will be safe?

- What about the years I’ve spent learning skills, developing tools and scripts and honing techniques? Will I have to throw that away and start again?

- I don’t have the time to learn yet another complex technology.

- I don’t even know where to start or who can help me if I get stuck.

The goal for the rest of the talk is to allay some of those fears, and if you still have questions, I'm always happy to chat to anyone about databases on Kubernetes - just reach out!

For last month’s DoKC Town Hall, Lori Lorusso moderated a discussion between myself, Lætitia Avrot and Frances Thai about “Demystifying Databases on Kubernetes”. Lætitia said that there are two types of people who want to run databases on Kubernetes:

- DBAs who need to be convinced that Kubernetes is stable enough and won’t mess up their databases.

- Devs who are ready to run everything in Kubernetes and don’t understand why DBAs are so conservative.

Because, let’s face it, DBAs are risk averse and change averse. And for very good reason. In general, databases aren’t supposed to be exciting or innovative. They’re supposed to keep your data safe and secure and make sure it’s accessible when you want to query it.

If there’s any kind of excitement around a database, it usually means something’s gone horribly wrong.

As I said in the abstract for this talk: If you’ve spent decades learning your trade, honing your database expertise and putting together your database administration toolbox, and you’re confident in your ability to implement and maintain a reliable, secure, performant database environment, why would you risk upsetting that by migrating to a completely different architecture?

Challenges of Databases on Kubernetes

What are some of the challenges of running databases on Kubernetes? How can we overcome them?

- Kubernetes is a new technology (certainly compared to bare metal servers or VMs) and it introduces complexity and therefore risk.

- You may not yet have team members who have the knowledge and the confidence to work with Kubernetes.

- Things need to be done in (slightly) different ways.

- A “lift and shift” approach may not work for all of your databases/database applications. Some database applications may be better built from the ground up in a cloud-native way.

- The business may need additional reassurances. You may need additional reassurances.

- Perhaps one of the most important challenges is that Kubernetes itself doesn’t inherently know how to manage Postgres. Or any other database system.

If you start by thinking “I’ve got to move all my databases to Kubernetes”,

you’ll probably be wondering how on earth you’re going to manage that particular balancing act.

Especially if you don’t even know how or where to start.

As a DBA, you’re already juggling enough things!

Focus on the things that are actually in your remit, the things that it makes sense for you to spend time and energy on.

You don’t need to be a Kubernetes expert. You may have a Kubernetes team in-house,

or you may want to consider one of the managed Kubernetes platforms out there.

Don’t try migrating all of your databases to Kubernetes in one go.

You may even find that it doesn’t make sense to migrate some of your databases;

it’s obviously all going to be a cost-benefit exercise.

Many people find success by starting out with a small new project.

You can build confidence by migrating some of your less critical database applications and monitoring those for a while

to give everyone a chance to get used to the new reality.

Strengths of Databases on Kubernetes

What are the positives of running databases on Kubernetes? What does it bring you as a DBA?

I like to turn this around and look at some of the challenges of running databases without Kubernetes.

One of the main challenges of managing databases in general is the sheer scale of tasks that a DBA is responsible for.

And DBA teams are looking after more and more databases, containing more and more data, and processing more and more complex transactions.

As a DBA, you’re probably responsible for many of these things, and possibly more besides.

I’m sure you don’t need me to read the list - you know better than I do how long your to-do list is!

You’re probably busy All. The. Time.

As mentioned in the list of challenges, Kubernetes itself doesn’t know how to manage a database. It doesn't know how to:

- Put a high availability Postgres architecture in place.

- Monitor your databases.

- Take backups.

- Perform a database point in time recovery.

- Upgrade your database cluster...

If you're both a Postgres expert and a Kubernetes expert, you can probably cobble something together that will do all of that, just as you’ve done in existing bare metal or VM environments. But as we've already said, as a DBA, you already have enough on your plate, and becoming a Kubernetes expert probably isn’t on the cards.

This is where the Kubernetes Operator comes in. As a reminder, an Operator extends Kubernetes, using custom resources and the control loop to keep your environment in the state you declare.
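To make the control loop idea a bit more concrete, here is a minimal Python sketch. It is not how any real operator is implemented: the `ClusterSpec` and `ClusterState` classes, the `pg-N` pod names and the `reconcile` function are all hypothetical, and a real operator talks to the Kubernetes API rather than editing a list in memory. The point is only the shape of the loop: compare what was declared with what exists, then act on the difference.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterSpec:
    """Desired state, as declared by the DBA (hypothetical field)."""
    instances: int


@dataclass
class ClusterState:
    """Observed state of the running cluster (hypothetical in-memory model)."""
    pods: list = field(default_factory=list)


def next_free_name(pods: list) -> str:
    """Return the lowest unused pod name of the form pg-<ordinal>."""
    ordinal = 0
    while f"pg-{ordinal}" in pods:
        ordinal += 1
    return f"pg-{ordinal}"


def reconcile(spec: ClusterSpec, state: ClusterState) -> list:
    """Run one pass of the control loop and return the actions taken."""
    actions = []
    # Too few instances: create pods until the declared count is reached.
    while len(state.pods) < spec.instances:
        name = next_free_name(state.pods)
        state.pods.append(name)
        actions.append(f"create {name}")
    # Too many instances: remove the most recently added pods.
    while len(state.pods) > spec.instances:
        actions.append(f"delete {state.pods.pop()}")
    return actions


spec = ClusterSpec(instances=3)
state = ClusterState()

first_pass = reconcile(spec, state)    # builds the cluster from nothing
second_pass = reconcile(spec, state)   # state already matches: no actions
state.pods.remove("pg-1")              # simulate losing a pod
repair_pass = reconcile(spec, state)   # the loop puts it back
```

Running the same function again and again is the whole trick: when nothing has drifted it does nothing, and when something has, it takes only the actions needed to get back to the declared state.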

As a busy DBA, it’s difficult to fix things permanently, improve procedures, fully automate things, avoid the issues that cause the 2am callouts, keep up to date with upgrades…

And DBAs are a bit like gold dust, so it’s not easy to just go out and hire more if you need more resources. Anything that can help with some of that has to be a good thing!

Even a fairly simple database architecture needs:

- A primary database and replica databases in one or more data centers.

- HA with tools such as Patroni and etcd.

- Backup and recovery with pgBackRest.

- A backup repository in the cloud to enable replication between the two data centers…

Then you need to add in things like monitoring, connection pooling, pgAdmin, primary and replica database services, maybe with HAProxy, authentication, certificate management…

And just the monitoring stack that was represented by a simple rectangle in that previous diagram probably looks more like this, with an exporter, metrics collector, alerting tool, dashboards etc.

If you’ve already had to set up this kind of environment, whether by hand, with scripts, or even using tools like Terraform and Ansible, you’ll know how time-consuming and fiddly it is, that there’s always something that doesn’t quite match between the dev, test and prod environments, and that it’s very difficult to get right even once, let alone every single time.

Kubernetes automates the things that would otherwise make managing large numbers of containers such a headache, and includes a lot of the features that you need to manage a large-scale database environment. In addition to all of the provisioning, scheduling, self-healing, security, networking etc., these include:

- Persistent storage (with Persistent Volumes).

- Stateful sets (DBAs will like the word stateful!), which are ideal for an HA database cluster where your primary and standby database pods shouldn’t be identical.

- Sidecars so you can deploy containers alongside your database container to do things like extracting metrics, managing backups etc.
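As a rough illustration of why stateful sets suit databases, the Python sketch below models the naming only. In Kubernetes, stateful set pods are named `<name>-<ordinal>`, and each gets its own persistent volume claim named `<claim template>-<pod name>`, so a recreated pod comes back with the same identity and the same storage. The `pg` and `pgdata` names are made up for the example, and the deployment suffix is simplified (real deployment pod names carry a generated hash and random suffix).

```python
import secrets


def statefulset_pods(name: str, replicas: int, claim_template: str = "pgdata") -> list:
    """Pod and PVC names for a stateful set: stable, ordinal-based identities."""
    return [
        {"pod": f"{name}-{i}", "pvc": f"{claim_template}-{name}-{i}"}
        for i in range(replicas)
    ]


def deployment_pods(name: str, replicas: int) -> list:
    """Deployment-style pod names: interchangeable pods with generated suffixes.

    Simplified assumption: one random hex suffix stands in for the real
    generated hash and suffix.
    """
    return [f"{name}-{secrets.token_hex(3)}" for _ in range(replicas)]


# The same identities come back every time, so pg-0 always finds pgdata-pg-0.
before_restart = statefulset_pods("pg", 2)
after_restart = statefulset_pods("pg", 2)

# Deployment-style names are different on every rollout.
stateless_names = deployment_pods("pg", 2)
```

That stable pairing of pod and volume is what lets a database instance restart and pick up its own data directory, rather than being treated as an anonymous, replaceable copy.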

The idea of a Kubernetes Operator is that it performs the tasks that a human operator would otherwise perform. In the case of a Postgres Operator, that’s the day to day tasks that a DBA would otherwise have to perform either manually or via scripts.

As well as the Crunchy Data Postgres Operator (that’s the one I can speak in detail about because it’s the one that I work with day to day), there are various other Postgres Operators, as well as operators for other database management systems. Some of these have now been tried and tested for years in production environments.

Each of these operators combines detailed Postgres and Kubernetes knowledge and expertise. You declare what your Postgres cluster should look like, then let the features of Kubernetes, the Operator logic, and the integrated tools, configure your cluster and work continually to keep it in the state you defined.

You get a database architecture that looks something like the one in this diagram created and managed for you, including all the parts that we mentioned earlier. Having all of that automated by an operator is a huge time-saver, and minimises error.

Backup and recovery is built in and automated, letting you back up locally and/or to cloud storage.

You get automated point-in-time recovery, either in place or as a clone to a new cluster, and you can define schedules and retention periods in a single YAML file...

One part that still always seems like magic to me is the HA mechanism - you can delete the primary pod and watch the Kubernetes self-healing, the operator logic and the Patroni configuration work together to:

- Almost immediately promote a new primary database.

- Reconfigure the cluster to follow the new primary.

- Create a new database pod.

- Integrate the new database into the cluster as a replica.
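The four steps above can be walked through in a small Python simulation. This is a toy model under stated assumptions, not the actual logic of Patroni or any operator: the `handle_primary_loss` function and the `pg-N` pod names are hypothetical, and the new primary is simply the first remaining replica by name, whereas a real HA tool would typically weigh things like replication lag.

```python
def handle_primary_loss(cluster: dict) -> list:
    """Simulate recovery after the primary pod is deleted.

    `cluster` maps pod name -> role ("primary" or "replica") and is
    updated in place. Returns a log of the steps taken.
    """
    log = []
    lost = next(name for name, role in cluster.items() if role == "primary")
    del cluster[lost]
    log.append(f"primary {lost} lost")

    # 1. Promote a replica (toy rule: first remaining pod by name).
    new_primary = sorted(cluster)[0]
    cluster[new_primary] = "primary"
    log.append(f"promoted {new_primary}")

    # 2. The remaining replicas are reconfigured to follow the new primary.
    for name in sorted(cluster):
        if cluster[name] == "replica":
            log.append(f"{name} now follows {new_primary}")

    # 3. A replacement pod is created for the one that was lost.
    log.append(f"recreated {lost}")

    # 4. The replacement joins the cluster as a replica, not as primary.
    cluster[lost] = "replica"
    log.append(f"{lost} rejoined as replica")
    return log


cluster = {"pg-0": "primary", "pg-1": "replica", "pg-2": "replica"}
steps = handle_primary_loss(cluster)
```

Note that the pod that used to be the primary comes back as a replica: the cluster ends up with the same number of instances and exactly one primary, just not the same one as before.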

There are all sorts of security features built in, and upgrades become much less scary because they’re completely automated (although you should still read the release notes and do some testing!)

- Many organisations have now been running mission-critical, multi-terabyte databases on Kubernetes for multiple years.

- Some of them look after many hundreds or even thousands of databases.

- The same team of DBAs can run many more databases than was previously possible.

- It’s easier than ever to implement internal DBaaS architectures to make databases available to whoever needs them.

I shared a couple of case studies as examples, just as reassurance that you can read about the experiences of big organisations, in all sorts of different domains, who are successfully and happily running Postgres on Kubernetes in production. Some of them at huge scale.

These are examples from the Crunchy Data website, and if you go to the websites of any of the other organisations that maintain a database operator or help customers to run databases on Kubernetes, I’m sure you’ll be able to find many more reassuring stories.

As we saw, there's lots of database expertise built into the operator.

But the operator is there to simplify your life as a DBA, not to replace you.

The idea is to remove the time-consuming, error-prone tasks that you want to be automated,

and hopefully some of the things that may otherwise cause you to be called at 3am because there’s a database down.

Rest assured, your database expertise is still needed, perhaps now more than ever, and you should have more time for the more interesting things such as:

- Strategy

- Architecture design

- Data modelling and design

- Sharing database knowledge with others

- Troubleshooting those tricky performance problems…

Getting Started and Building Confidence

How can you experience the magic and wonder of running Postgres on Kubernetes for yourself?

As Frances Thai said in our recent DoKC Town Hall Panel - there’s nothing to it but to do it! So how can you get started?

Learn “just enough” about Kubernetes. You don’t need to be an expert, but it’s really helpful to have an idea of how your database is interacting with the different elements, and how the basic components fit together.

You want to know about the control plane and the worker nodes, understand what a pod is, know what makes a stateful set different from a deployment, understand what a PV and a PVC are, etc.

There are loads of Kubernetes training courses out there, but the Cluster Architecture section of the K8s documentation is a good place to start to get this type of understanding. You could also try out the tutorials there.

Once you have that basic K8s knowledge, it’s time to try out a Postgres Operator for Kubernetes.

Install the operator, create a cluster, and try out the different features. Delete your primary database and watch the magic as it recovers automatically.

There’s plenty of documentation, tutorials and videos out there to help. Have fun with it as you build your confidence, and don’t hesitate to ask questions.

This is a link to the get-started guide for PGO, the Crunchy Data Postgres Operator,

which gives you instructions, Helm charts and Kustomize manifests to install the operator and create your first cluster.

I’m sure similar resources are available for other operators.

Connect with the Data on Kubernetes Community: take advantage of the getting started guide and other resources on the website,

join the Slack channel and have a look at the 2024 DoKC report.

And most importantly, keep building that hands-on experience.

- The data on Kubernetes trail has been blazed!

- It’s tried and tested in production and there are many organisations successfully running mission-critical, multi-terabyte databases on Kubernetes.

- Your database expertise is still relevant and necessary, possibly more so than ever in this age of ever growing databases.

- Embracing the automation and self-healing of Kubernetes will allow you to do more with less, and avoid some of those middle of the night crises.

- And you get to concentrate on the most interesting and fun parts of database administration.

Thank you for reading!

Get in touch, or follow me for more database chat:

LinkedIn: https://www.linkedin.com/in/karenhjex/

Mastodon: @karenhjex@mastodon.online

Bluesky: @karenhjex.bsky.social